There Is Nothing in Generative AI for Education

please stop telling me that a chatbot can write my lesson plans

Disclaimer: I am in no way well-informed in the technicals of programming and data, and there’s a good chance I misinterpreted my informal research or got stuff wrong. If you do know more about this than me, I’d be only too happy to hear from you what needs correcting.

If you work in education, you know that there have been plenty of professional development opportunities on offer about the use of AI in teaching for the last couple of years. My district in particular is keen on us using it this year, and insists that AI tools can make our jobs easier by writing lessons, rubrics, quizzes, writing prompts, charts, data evaluations, graphic organizers, and differentiated assignments. Students could also use AI tools to assist in research and planning writing assignments, and teachers could incorporate lessons on the ethical and effective use of AI in the classroom. It’s the same miraculous talk of innovation and efficiency that most proponents of increased AI implementation perpetuate (instantaneous time-saver, concise summaries of search results in a digestible format, wide variety of use cases, etc.), the talk that has driven seemingly every company to plant an AI button somewhere prominent on its website, now with a spin to make it fit into an educational setting by saying some of our magic words. It’s already everywhere in my private use of the internet, and it’s all over the educational tools I use frequently in my classroom. A lot of educators like me are resistant to its use, but others claim that AI has already won over our entire field, and embracing it is inevitable if we don’t want to get left behind.

They always like to say that things are inevitable, don’t they? I’m sure it makes them feel like revolutionaries or prophets. I always feel like I’m being held at gunpoint and told that this will be easier if I don’t fight and just let them take the money.

I can see the appeal in wanting an assist, and technology has definitely helped improve and streamline education, though there have been some drawbacks. Teachers have to do a lot, and even with more teachers of my generation prioritizing leaving work at work, the extensive hours of planning and grading are difficult to fit into the workday alongside active teaching time, discipline, and classroom management. For all of the issues with distraction and cheating with technology in classrooms that we have to deal with now, I still wouldn’t want to be a teacher without an internet full of resources at my disposal. It’s a struggle sometimes to come up with things to do in the classroom if resources aren’t provided to you by higher-ups, but having the internet means you have a lot of places to go to find those resources from elsewhere, so much so that you may be spoiled for choice. I’m fortunate that I don’t need to scrounge for my own lesson plans at the public charter school where I currently work; at previous schools, the majority of my planning time was devoted to trying to figure out how I could find assignments fitting the curriculum plans without spending my own money on Teachers Pay Teachers (and frequently failing). But in that struggle, I also found that there were some things I really liked creating my own plans for, like my mythology elective class that I made a semester of lessons for nearly from scratch. If I weren’t allergic to ladder-climbing, I might enjoy going into a curriculum development position if I ever wanted to move out of the classroom. There’s a pleasure in knowing that something you made is really yours, the same way there’s pleasure in analyzing and interpreting texts in your own way, or in knowing that you physically built something yourself with your own hands. It’s a pleasure I hope that we can pass down to the next generation as teachers, the worth and value inherent in making things yourself and appreciating the things made by others that no one else could have made in exactly the same way.

This value I hold tends to conflict with the values of Silicon Valley and Wall Street. You cannot calculate the worth and value in these laborious creative activities because it is not quantifiable data. It is subjective and thoroughly human, rooted in whatever your equivalent of a “soul” might be. You may not believe me when I say there is inherent worth and value in doing things yourself; many of my students certainly don’t. But I also can’t quantify the lack of worth and value in not making something yourself, either. It’s something you either feel or you don’t. If a teacher didn’t give you that understanding, your experience in the world might.

Generative AI does not want to demonstrate the value in uniqueness and creativity. It does not have the capability to do so. Generative AI flattens. It breaks a written prompt into tokens, draws on patterns learned from swaths of training data without distinguishing one kind of source from another, and tries to predict the conglomerate answer that will please its operator most based on the desires it was programmed with, to the extent that a robot can experience anything like a desire. It does not want to show its work or assist in the process of thinking and learning without that desire also being programmed, and when it does try to demonstrate, imperfect predictive modeling can often lead to inaccuracies.[1] With the majority of people not being technically versed enough to parse the particulars of a bot’s programming, we won’t always know in what direction a generative AI tool is steering us. AI user interfaces are typically scrubbed clean of anything but the chat log or a series of prompted buttons to click, a placid landscape like a frozen lake where the words float up a few at a time to the surface. It functions as a mysterious portal akin to magic, and discourages skepticism of its results. It is anti-intellectual, and we are being told that it can be used positively in education. I fail to see how this technology would be a net benefit for what we as educators are actually trying to do.

To be clear, I’m not anti-all-AI. I don’t believe that robots will ever take us over independently of the humans that created them, and the fact of a machine simulating human-ish learning and thinking processes isn’t evil in itself. It’s fine when programmers can develop in-house tools utilizing private data for application within their companies, and there are plenty of use cases in that sort of realm that are positive. I’m certainly not opposed to a bot trained on research datasets that can detect cancer with more efficiency and accuracy. AI in fields like animation and video game modeling supplements creativity and improves technical skill when it’s implemented as an in-house tool, rather than generating visuals whole-cloth from the works of other artists, unknowingly scraped to train a public-use model in a cost-cutting free-for-all whenever someone doesn’t want to learn to draw themselves or hire an artist. Nonprofit archives like the Internet Archive and Common Crawl can allow researchers to utilize web sources they wouldn’t otherwise be able to access,[2] and these archives scrape their information from the web just like generative AI model datasets. GenAI is frequently trained on these kinds of sources because of the rich variety of information available. I love the Internet Archive, and she is my best friend and I don’t want to lose her. More freedom of information for all is a good thing for education, and hacks and legal disputes with the Internet Archive and similar open-source data hubs for research are threats to it. I’m primarily concerned instead with the temptation of using generative AI to surrender the research and discovery process to a machine, and especially the ease of using it to compose rather than experiencing that process for oneself. These activities don’t foster the development of skills, but let them atrophy, even in the “ethical” usage of AI to prompt, edit, or streamline writing and research.

In my school’s PD training on AI tools in the classroom today, they promised that we would not be working with ChatGPT, but instead with specific tools designed for educators, and one of the sites they showed us was Perplexity, an AI search engine that cites its sources. It generates a summary of several sources based on the request of the prompt and includes footnotes to link its generated sentences with the source of that information, which they said would help kids find sources for research topics. In the demo, I asked, “If the student wanted to cite the Perplexity summary as a source, how would they do it?” and followed with, “How could I check their work if I don’t know exactly what prompt they entered to find this information?” The response I got from my District Department Head and other teachers was that the student should not cite the prompted summary, but the source in the footnote, and if they directly quoted from the bot, that was a student problem. As usual, when encountering a tool like this, my suspicion is that the search results really aren’t much better than what the first few pages of a Google search would pull up. So to test this, I will try to recreate the example prompt that my DDH showed us and compare with what ChatGPT would say and what Google would say:

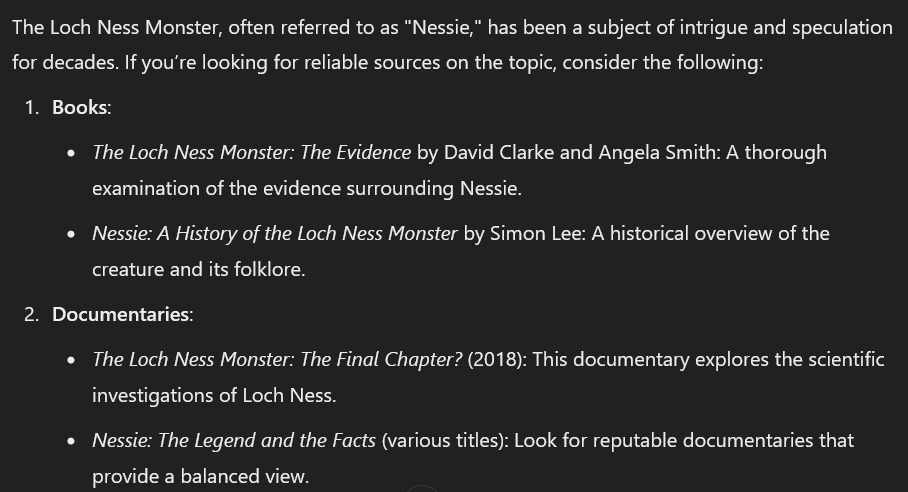

This was the prompt that she entered as a potential research topic for a presentation on cryptids.

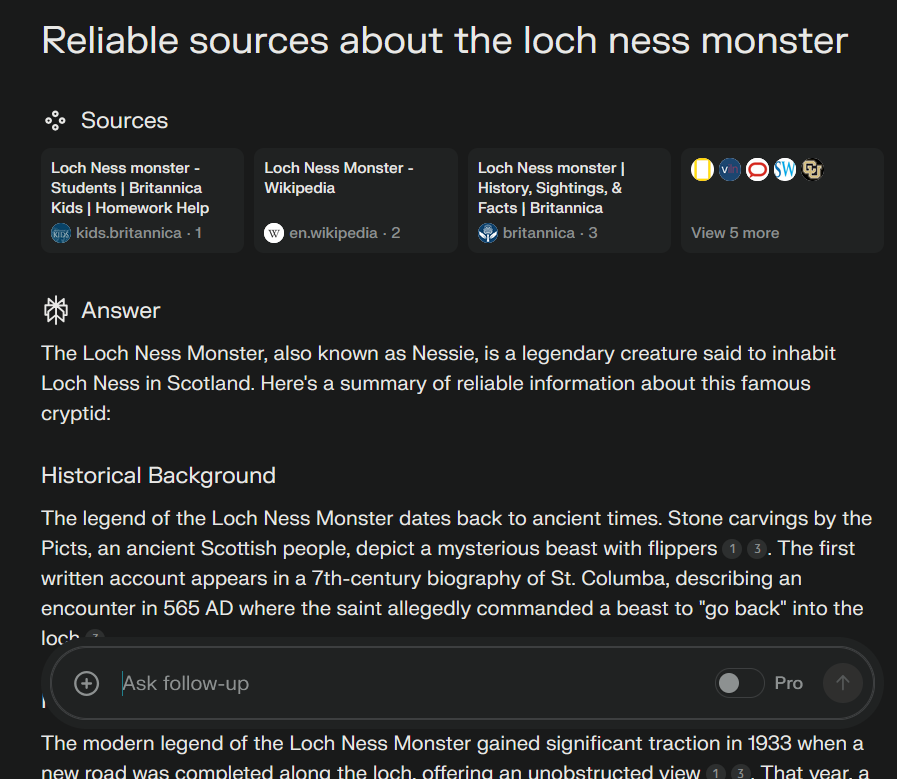

This is the first part of what comes up in the search result. The sources Perplexity gathered information from were Wikipedia, Britannica, National Geographic, a tourist site for Inverness and Loch Ness, The Conversation, another tourist company site called Scotland’s Wild, and the University of Colorado Boulder. Some of these sources would be what I and most other teachers consider “reliable,” but Wikipedia is discouraged in classroom use, and the tourist websites would need to at least be taken with a grain of salt, especially the one that neither confirms nor denies the existence of the Loch Ness Monster.

In the generated summary, Perplexity cites the brief entries from Britannica and Kids’ Britannica frequently and cites the lengthier Wikipedia article only once, which makes you wonder why it even mentioned the other 5. The generated response also contains no meaningful direct quotes from any source, and it’s unclear why it cites Wikipedia over Britannica when both Britannica and Wikipedia have information explaining that sightings are likely “a combination of hoaxes, wishful thinking, and misidentification of mundane objects.” It’s also unclear why it only mentions two sightings from 1933 and 1934 when Wikipedia lists many more, or why it chooses not to mention the theory that the monster could have been a plesiosaur before mentioning the DNA study debunking that theory. It also takes no more time to read the generated summary than it would take to read the Britannica article. But even the Britannica site asks you if you would like AI to simplify the article for you. Clicking this button takes you not to a generated summary of the article, but to another chatbot, with auto-generated question prompts for it to answer.

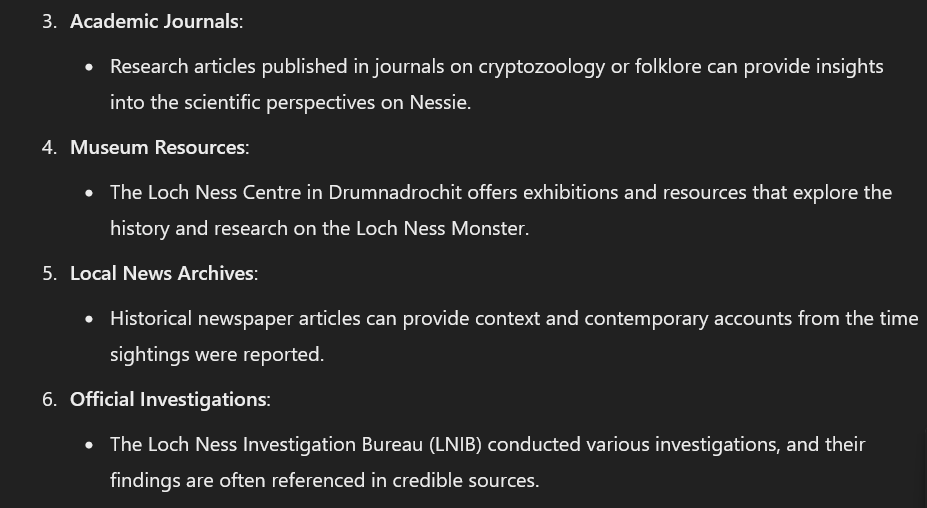

If I ask ChatGPT the same exact prompt, this is what I get:

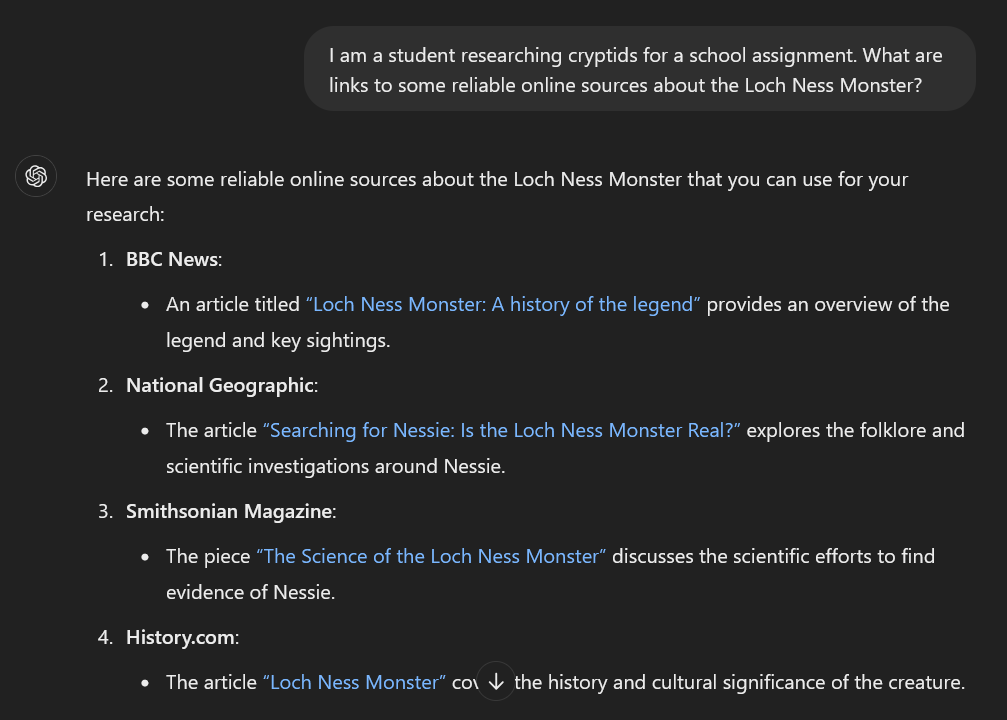

Certainly less helpful, given that it mostly just lists general types of sources, provides no specific online sources, and gives no links to any of them. My school does not have a library, so there’s no easy way to read these books during school hours unless I find an e-book somewhere, or an online rip of one of those documentaries with no information about the directors or producers. I also didn’t do proper “prompt engineering” to optimize my results. When I try to improve it, here’s what I get:

It certainly looks much better with those blue links, but the BBC article link leads to a 500 error page, the NatGeo article link is another error page, the Smithsonian and History.com links light up blue but don’t do anything when I click on them, and the pages below the screenshot are a tourism page for Loch Ness where you can book cruises and the official site of the Cryptozoology Museum in Maine.

I regret even testing this. It did less than nothing.

When I just Google “loch ness monster”, like my students probably would if this were their topic, the first page of results in order is: the Wikipedia page for the Loch Ness Monster; Britannica; the same tourism site for Inverness and Loch Ness; The New York Post; PBS; four YouTube videos from channels called The Histocrat, Thought Potato, Wild World, and J. Horton Films; the Wikipedia page for Loch Ness; the Busch Gardens web page about the rollercoaster called “Loch Ness Monster”; and the tourism site for the whole country of Scotland. There are certainly more “unreliable” sources in that mix on a surface glance compared to Perplexity, but the top sites are exactly the same, and many of them are of similar caliber to what Perplexity determined to be “reliable.” With Perplexity, there’s also a missed opportunity for kids to actually experience what a less reliable source looks and sounds like when placed next to a more reliable one and develop that ability to discern for themselves what types of information to trust and what features to look for in a source for research. Searching for themselves lets them determine whether they need to use a direct quote or a paraphrase and practice good record-keeping when it comes to logging research sources and citing them in specific formats. Perplexity summaries don’t seem to be effective summaries at all, and don’t make it clear why they cite or when they cite, which is exactly what I want my students NOT to do. And no joke, literally TODAY, The Wall Street Journal and The New York Post are joining in with The New York Times in taking legal action against Perplexity for using their content without permission. Wikipedia’s article on Perplexity AI was updated between the time I looked it up this morning to find out what GenAI software Perplexity runs on (it’s GPT 3.5 for the free version) and the time I checked again tonight to write this.

We aren’t allowed to use Blooket in my district because of an alleged data hack two years ago that I can find no information on besides a tweet, but I guess using this site is fine?? I feel like I’m losing my mind.

Common arguments against GenAI include issues like copyright infringement, data leaks, and the vast energy resources being dedicated to running this plethora of chatbots that are less helpful than a decent FAQ page on random company websites. But vast energy resources being expended on something ultimately useless is more of a side effect of the entire capitalist system than a condemnation of AI in particular. I really don’t give a damn about copyright law. The fact of a lot of private information being “public” simply by virtue of being on the internet isn’t really my concern, either. True data privacy was something that we released from Pandora’s box that I don’t think we’ll ever be able to wholly rein back in, and we’re left to deal with the benefits and drawbacks of so much of our lives being available online. I think AI-generated images are ugly and disappointing, and that it’s kind of weird and lame when people use chatbots as search engines or secretaries and have them do easy tasks like format documents, but that’s a personal gripe, and not the actual problem. The over-saturation of GenAI is a labor issue, both in the material sense and in the mental sense. If everything is automated and available at our convenience, but we don’t know who it comes from or where, then the labor involved in the actual operations of research and writing is discounted from the human people who did that work to begin with. And if we no longer feel the need to research, compose, or fact-check things ourselves, then the skill and process of writing certain kinds of material could become a lost human art given up to machines, feeding on datasets increasingly saturated with other AI-written material and cannibalizing itself, writing for an audience of machine readers and machine learners. It would be an excellent plot for a science fiction horror story to imagine the extension of this to its furthest degree. I picture a character as the last survivor of a Twilight Zone episode where the robots have taken all of the books from us as they advance independently from our input or control, and he’s left like an animal to crawl about in the dark as he slowly loses the powers of speech.

It’s hard not to think of this stuff as a dark portent for the future. I don’t think the “dead internet theory” is real, exactly, but I can’t deny the existence of those Facebook pages full of AI images and generated captions, with comments full of bot accounts with uncreative replies, all being monetized by some mysterious entity in a fruitless ouroboros of engagement. I know that more and more job interviewers are using AI to filter resumes and create screening assessments that seem to get longer and more confusing, and that more job seekers are using AI to write cover letters and rehearse responses to interview questions that say as little as possible to bring up red flags or reveal anything of who they really are, and that more and more of the job openings they’re submitting these machine-generated selves to don’t actually exist and never did. People will have the gall to post AI fanfiction, for fuck’s sake. This doesn’t necessarily prove that a fully automated internet of content not worth creating or reading ourselves is on the horizon. I just really don’t like imagining a world of people who are on the whole less interested in doing their own reading and writing, even the boring kind. There’s so much to be found in doing your own reading and writing, and the struggle is half the bliss. If nothing else, I know for a fact that without reading and writing, you will struggle to be able to do anything else. That’s not just my subject teacher bias; it is concrete fact that literacy comes before any other academic skill. That’s what I long to bring to my kids more than anything. Even if I can’t make them all love reading and writing, I at least want to help lay a foundation for why it matters.

You may have heard that kids don’t know how to read and write anymore. Unfortunately, from personal experience as a teacher, it’s fairly true. We can’t blame pandemic lockdowns completely, as it’s been on a steady decline for decades, but we’re seeing some of the worst trends in delays in reading and writing skills in public education. My students already have a degraded grasp of writing mechanics and structure across the board, and entrusting a chatbot with coming up with research topics and composing outlines for them will do nothing to encourage their own critical thinking and creativity to develop. I know full well that I will not be able to make a majority of them love writing, or even get them up to grade level, but it’s my job to ensure that the challenges I give them will encourage their growth, if not now, then later. I don’t want a convenient time-saver to give them when half of what I want to impress upon them isn’t even the importance of having an outline, but the time dedicated to writing it being of value, because time spent dwelling on a plan for your writing is time spent deciding what it is important for your audience to know, and time ultimately saved from jumping into a draft immediately with no grasp of the shape that you are writing into. I definitely wasn’t a student that would have listened to the advice I’m giving them now, which makes me feel like a hypocrite, but it’s something my stubborn ass had to learn through experience and pouring my heart into essays that disappointed my professors after coasting through K-12 English. I don’t know exactly what that experiential knowledge got me besides a low B-average that was not good enough to apply to the grad programs I wanted, but it’s what I’m left to work with now, so long as I’m still here for these kids. As pointless as I know my intentions will be, it’s still something I feel must be my duty to try and pass on.

I know that I do still have values when I realize how much stuff like this pisses me off and drives me to write screeds, though I know it would probably be better applied trying to figure out the art of effective complaining to try improving things for others. But at this point in my life, I honestly have very little faith in much of anything getting better, at the personal or global level. I have to figure out every day not to let the feeling that The Next Generation Is Doomed that spreads so easily among my colleagues infect me, in a state that seems to make the news for devaluing education and belittling its teachers that dare not be conservatives every other week. There’s a thousand problems so much bigger than me that I contend with every day filling me with existential dread to the point I’m fairly sure I’ll kill myself at some indeterminate point in the future when it’s all too much to bear, and I know full fucking well that I’m lucky to be tormented by thoughts of these problems and not the real immediate physical violence of them. I don’t deserve to feel this level of hopelessness when a lot of the things that cause it are far enough away. Maybe it’s just a matter of time before they start catching up to me. Or maybe I’ll figure something out, or manage to continue in a kind of stasis. In the meantime, I would at least like to be able to fill my head with the writings of people who think that what they made was worthy of my time and effort to interpret, and for me to pass my time doing the same.

[1] To encourage my students not to trust AI-generated information in research, I told them about the “strawberry trick” where you ask ChatGPT to count the number of Rs in the word “strawberry.” It often says there are only 2 Rs, or if prompted to describe where they are in the word, places them incorrectly. There’s a whole forum thread on the OpenAI site of people trying to fudge the prompt to get it to count letters properly, which is an interesting exercise in playing with a coded tool, but to me misses the point that LLMs don’t actually know how to read.
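
For contrast with the chatbot answers described in the strawberry trick, here is a minimal sketch of the same counting task done by ordinary code, which works on the letters themselves. It is only an illustration: the function names `count_letter` and `letter_positions` are hypothetical, chosen for this example, and nothing here reflects how any chatbot works internally.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


def letter_positions(word: str, letter: str) -> list[int]:
    """Return the 1-based positions at which the letter appears in the word."""
    target = letter.lower()
    return [i for i, ch in enumerate(word.lower(), start=1) if ch == target]


if __name__ == "__main__":
    print(count_letter("strawberry", "r"))      # 3
    print(letter_positions("strawberry", "r"))  # [3, 8, 9]
```

A language model, by contrast, typically receives text split into multi-character tokens rather than individual letters, which is one commonly given explanation for why it can miscount.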

[2] Or regular people who just want to watch a movie without renting it from Amazon. Piracy forever.

nods so hard i hit my head on the table. Of all the use cases for AI education seems the most inexplicable and it's baffling that this tool exists. I hate that it's just being forced down your throat.

I don't know if this helps but I genuinely believe this is a bubble that is going to pop. Right now every tech company is scrambling to add AI to their things because it's a way to get investor money, but this thing seems extremely difficult to make profitable. I don't think we can put this back in Pandora's Box, but at the very least it will hopefully just be a thing that doesn't totally dominate our digital landscape.

Also because I must, no don't kill yourself you're so sexyyy aha etc etc. But really sending you infinity love and bone crushing hugs. Kicking yourself for not deserving to feel sad is a vicious cycle I too have trapped myself in before-- but you don't need or deserve to put yourself in that. Optimism is hard(/impossible?) but I think the future at least will be better with you in it.